It intrigues me that people are still obsessed with finding the differences between SRE and DevOps, and with the role the two play in Digital Transformation. Hence this blog.

To be Digital, an organization needs to look at four quadrants that drive continuous improvement:

1. People – Our Employees – How do we “really” empower our people, and how do we get their DISCRETIONARY EFFORT to kick in?

2. Customer – How do we understand customer intent before our competitors do, and how can we hyper-personalize offers so that customers see the best VFM (value for money) and come back for REPEAT BUSINESS?

3. Optimizing Operations – This is where the adoption of Automation, DevOps flows, 360-degree Security, Site Reliability Engineering, Elastic Infrastructure, Containerization, Microservice Architecture, etc. comes in. The underlying tenets are to think granular and to be able to deliver quickly and revert quickly in a fail-safe, blameless culture. This is not about piecemeal three-month consultant projects, but about an in-depth, organization-wide cultural change. It requires great Leadership, sharp intent, and the ability to grasp several aspects of the full picture.

4. Transforming the Service or Product – This means using “Data” as the key asset to derive clear insights about the customer. It is about the organizational culture becoming bolder and more innovative: building partnerships to leverage ecosystems, failing fast, failing safe, taking a test-and-run approach, learning, adapting, and continuously improving to deliver Cheaper, Faster, and Better.

Site Reliability Engineering (SRE) and DevOps share the goal of building a bridge between development and operations to deliver higher Business Value.

- SRE and DevOps share the same foundational principles.
- SRE can be viewed as a specific implementation of DevOps.
- They share the same goal of rapidly delivering reliable software.

What is SRE?

SRE, or site reliability engineering, is a methodology developed by Google engineer Ben Treynor Sloss in 2003. The goal of SRE is to align engineering goals with customer satisfaction. Teams achieve this by focusing on reliability. SRE is an implementation of DevOps, a similar school of thought. Google is also responsible for bringing these two methods together. In this article, we'll break down more of what this looks like in practice.

SLIs and SLOs

Reliability is a subjective quality based on your customers’ experiences. SRE allows you to measure how happy your customers are by using SLIs. SLIs, or service level indicators, are metrics that show how your service is performing at key points on a user journey. SLOs, or service level objectives, then set a limit for how much unreliability the customer will tolerate for that SLI.
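To make the relationship between an SLI and an SLO concrete, here is a minimal Python sketch. The `RequestSample` shape, the 300 ms latency threshold, and the target values are assumptions chosen for illustration, not figures from any particular service.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class RequestSample:
    """One observed request on a user journey (hypothetical shape)."""
    succeeded: bool
    latency_ms: float


def availability_sli(samples: list[RequestSample]) -> float:
    """SLI: fraction of requests that succeeded."""
    if not samples:
        raise ValueError("need at least one sample")
    return sum(1 for s in samples if s.succeeded) / len(samples)


def latency_sli(samples: list[RequestSample], threshold_ms: float) -> float:
    """SLI: fraction of requests served within threshold_ms."""
    if not samples:
        raise ValueError("need at least one sample")
    return sum(1 for s in samples if s.latency_ms <= threshold_ms) / len(samples)


def meets_slo(sli: float, slo_target: float) -> bool:
    """An SLO is met when the measured SLI is at or above its target."""
    return sli >= slo_target


if __name__ == "__main__":
    # 999 fast, successful requests and one slow failure.
    samples = [RequestSample(True, 120.0)] * 999 + [RequestSample(False, 900.0)]
    print(meets_slo(availability_sli(samples), slo_target=0.99))
    print(meets_slo(latency_sli(samples, threshold_ms=300.0), slo_target=0.9995))
```

The SLI is only a measurement. The SLO is the line you draw on it, so the same data can pass one objective and fail a stricter one.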

Incident response

SRE teaches us that 100% uptime is impossible. Some amount of failure is inevitable. Because of that, incident response is a core SRE best practice. Responding to incidents faster reduces customer impact. But you need the processes in place to enable this. There are many components to incident response, including:

- Incident classification: Sort incidents into categories based on severity and area affected. This allows you to triage incidents and alert the right people.
- Alerting and on-call systems: Determine who is available to respond to incidents as needed. Set guidelines for who gets called and when. Make sure to balance schedules and be compassionate.
- Runbooks: These are documents that guide responders through a particular task. Runbooks are particularly useful for incident response. They include things to check for and steps to take for each possibility. They’re made as straightforward as possible to reduce toil. Automating runbooks can reduce toil further.
- Incident retrospectives: SRE advocates learning as much as possible from each incident. Retrospectives document timelines, key communications, resources used, relevant monitoring data, and more. Review these documents as a group. Use them to determine follow-up tasks or revise runbooks and other resources.
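The classification and alerting steps above can be sketched in a few lines of Python. Everything specific here is an assumption for illustration: the severity thresholds, the area names, and the on-call aliases are hypothetical, and a real organization would define its own.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import IntEnum


class Severity(IntEnum):
    SEV1 = 1  # widespread customer impact
    SEV2 = 2  # partial impact
    SEV3 = 3  # minor impact


@dataclass(frozen=True)
class Incident:
    title: str
    area: str                  # part of the system affected
    affected_users_pct: float  # share of users impacted, 0 to 100


# Hypothetical on-call rota keyed by affected area.
ON_CALL = {"payments": "payments-oncall", "search": "search-oncall"}
FALLBACK = "platform-oncall"


def classify(incident: Incident) -> Severity:
    """Assign a severity from customer impact (thresholds are assumptions)."""
    if incident.affected_users_pct >= 25:
        return Severity.SEV1
    if incident.affected_users_pct >= 5:
        return Severity.SEV2
    return Severity.SEV3


def route(incident: Incident) -> tuple[Severity, list[str]]:
    """Triage: choose who to alert based on severity and area affected."""
    severity = classify(incident)
    responders = [ON_CALL.get(incident.area, FALLBACK)]
    if severity is Severity.SEV1:
        # The most severe incidents also get a coordinator.
        responders.append("incident-commander")
    return severity, responders
```

Encoding the rules this way keeps triage consistent from one incident to the next, and the same table can be revised after a retrospective.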

Error budgeting

Nobody expects perfection. Some amount of unreliability is acceptable to your customers. As long as your performance meets your SLO, customers will stay happy with your services. The wiggle room you have before your SLO is breached is the error budget.

Your error budget can help you make decisions about prioritization. For instance, services with lots of remaining error budget can accelerate development. When the error budget depletes, teams know it's time to focus on reliability. Through this decision-making tool, SRE allows operations to influence development in a way that reflects customer needs.
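As a rough illustration of how an error budget turns into a prioritization decision, consider the Python sketch below. The function names, the 25% caution threshold, and the three policy labels are assumptions made for this example. Each team would agree its own policy.

```python
def error_budget(slo_target: float, total_events: int) -> float:
    """Number of bad events the SLO allows over the measurement window."""
    return (1.0 - slo_target) * total_events


def budget_remaining(slo_target: float, total_events: int, bad_events: int) -> float:
    """Fraction of the error budget still unspent (negative once breached)."""
    budget = error_budget(slo_target, total_events)
    if budget <= 0:
        raise ValueError("an SLO of 100% leaves no error budget")
    return 1.0 - bad_events / budget


def release_policy(remaining: float, caution_threshold: float = 0.25) -> str:
    """Map remaining budget to a decision (threshold is an assumption)."""
    if remaining <= 0:
        return "freeze"      # budget spent: focus on reliability
    if remaining < caution_threshold:
        return "slow down"   # budget nearly spent: ship carefully
    return "ship"            # plenty of budget: accelerate development
```

With a 99.9% SLO over 1,000,000 requests, the budget is about 1,000 failed requests. At 400 failures roughly 60% of the budget remains and the sketch returns "ship". At 1,200 failures the budget is overspent and it returns "freeze".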

SRE culture

The cultural changes of SRE are as important as the process changes, if not more so. The cultural lessons of SRE include:

- Blamelessness: When something goes wrong, it is never the fault of an individual. Assume that everyone acts in good faith and does their best with the information available to them. Work together to find systemic causes for the incident.
- Psychological safety: Teammates should feel secure. They should be comfortable raising issues and expressing concerns without fear of retribution. This encourages creativity, curiosity, and innovation.
- Celebrating failure: Incidents aren’t setbacks, but unplanned investments in reliability. By experiencing an incident and learning from it, the system becomes more resilient.

What is DevOps?

DevOps is a set of practices that connects the development of software with its maintenance and operations. Its name reflects these two parts: Development and Operations. DevOps originated from a collection of previous practices. These include the Agile development system, the Toyota Way, and Lean manufacturing. The term DevOps became well-known in the early 2010s.

The primary goal of DevOps is to reduce the time between making a change in code and that change reaching the customers, without impacting reliability. It seeks to align the goals of development with organizational needs to create business value. In this way, the goals of SRE and DevOps are very similar. Both focus on customer impact and efficiency. But the methods they use to achieve this vary.

Continuous Deployment

DevOps seeks to increase the frequency of new deployments of code. Faster, more incremental changes allow a more attuned response to customer needs. It also reduces the chance of major incidents caused by large, infrequent deployments.

Collaboration between development and operations

A core tenet of DevOps is to remove silos between development and operations teams. Rather than development “throwing code over the wall” for operations to handle, the teams work together throughout the service’s lifecycle.

Here are some DevOps practices that encourage cooperation between development and operations:

- Alignment on goals: Ensure both teams understand what they’re working towards. Shared roadmaps and agreed-upon metrics help with alignment. Use customer impact as a common priority.
- Develop with operations in mind: Development and operations should collaborate on how development should proceed. Operations makes suggestions that help it maintain the code in production.

Availability of data and resources

Monitoring data is a big deal for DevOps. DevOps advocates measuring valuable data and using it as your basis for decision-making. By default, data should be accessible across the organization.

Simply having a lot of data available isn’t enough to make good decisions. Metrics should be contextualized to provide deeper insights. Make sure that you're setting up monitoring that helps you learn about your system. Having too much data can actually make decision-making more difficult.

Automate where possible

Like SRE, DevOps advocates for automating wherever possible. Where SRE focuses on automating to increase consistency and reduce toil, DevOps automates to tighten the development cycle. By removing manual steps in testing and deployment, teams can achieve a faster release frequency.

How SRE connects to DevOps

You can implement both DevOps and SRE in your organization. A helpful way to combine the methodologies is to consider SRE as a way to achieve the goals of DevOps. But SRE is much more than development and deployment automation. It's about working across the whole system ecosystem to deliver operational excellence and increased reliability. Focusing on the goals of DevOps instead of the process-focused approach of SRE can also be helpful. Drawing from both methodologies as appropriate provides the best way forward.

SRE as an implementation of DevOps

SRE is a method of implementing the goals of DevOps. Here are some of the common goals of DevOps, and how SRE practices can help achieve them:

- Remove silos: SRE achieves this by creating documentation that the entire organization can use and learn from. Lessons from incidents are fed back into development practices through incident retrospectives.
- Change gradually: SRE advocates incremental rollouts and A/B testing. This effectively makes the change more gradual, achieving the same goal of reducing the impact of failure.
- Use tools and automate: Many SRE tools reduce manual toil. Whenever you automate or simplify a process, you reduce toil and increase consistency. You also accelerate the process, achieving DevOps goals.
- Metric-based decisions: SRE practices encourage monitoring everything and then constructing deep metrics. These will give you the insights you need to make smart decisions.
- Accept failure: Not only does SRE accept failure, it celebrates it and utilizes it. By strategically using error budgets, you can accelerate development while maintaining reliability.
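The "change gradually" goal above can be illustrated with a small Python sketch of an incremental rollout. It is a simplified model under stated assumptions: the stage percentages, the `error_rate_at` callback, and the error-rate ceiling are all hypothetical stand-ins for whatever your deployment tooling and monitoring actually provide.

```python
from __future__ import annotations

from typing import Callable, Sequence


def staged_rollout(
    stages: Sequence[int],
    error_rate_at: Callable[[int], float],
    max_error_rate: float,
) -> tuple[bool, int]:
    """Widen exposure stage by stage, stopping at the first unhealthy stage.

    stages: traffic percentages to try in order, e.g. [1, 10, 50, 100].
    error_rate_at: hypothetical hook returning the observed error rate
        while the new version serves the given percentage of traffic.
    Returns (completed, last_healthy_percent). When completed is False,
    the caller would roll back to last_healthy_percent.
    """
    last_healthy = 0
    for percent in stages:
        if error_rate_at(percent) > max_error_rate:
            return False, last_healthy
        last_healthy = percent
    return True, last_healthy
```

Because a failure at the 50% stage is caught before the final stage, the impact is limited to the users exposed so far rather than everyone at once.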

DevOps determines what needs to be done, whereas SRE determines how it will be done. DevOps captures a vision of a system that is developed efficiently and reliably. SRE builds processes and values that result in this system. You can establish your goals using DevOps principles, and then implement SRE to achieve them.

SRE vs. DevOps philosophy

SRE and DevOps share many philosophies and principles. Some that they share include:

- Placing value on collaboration across teams, particularly between development and operations
- Automation and toil reduction are key to increasing consistency and helping humans
- Improvement is always possible. There is always value in reviewing and revising policy
- Customer satisfaction is the most important concern. It’s the motivator for developing quickly and reliably
- Sharing knowledge, whether through monitoring data, incident retrospectives, or codified best practices, is key to making good decisions
- Failure is inevitable, and something to embrace and learn from

However, SRE and DevOps also have some differences in philosophy. Often these come down to priority. Some differences include:

- DevOps advocates for a fluid approach to problem-solving. SRE creates codified and consistent processes.
- SRE implements practices such as chaos engineering to further increase reliability. DevOps is more focused on the development lifecycle, so these extra practices don’t typically emerge.
- SRE generally advocates for lower risk tolerance than DevOps. Working under metrics like SLOs, SRE will implement policies such as code freezes to avoid a breach. DevOps is more comfortable adjusting standards of reliability as development requires.
- DevOps usually operates with improving development speed as a primary goal. SRE considers increased development velocity a byproduct of error budgeting and better incident response.
- Both SRE and DevOps have a major focus on automation, but SRE’s approach is more widespread. DevOps primarily automates to increase development speed and focuses on steps in the development cycle. SRE automates any processes it can, from chaos tests to incident management.

SRE vs DevOps teams

When implementing either SRE or DevOps in your organization, you’ll need to consider how these changes will actually take place. Will you:

- Build policies and procedures collaboratively and rely on everyone to follow them?
- Assign implementation duties to particular engineers in addition to their normal tasks?
- Reallocate engineers to be on a team wholly devoted to rolling out new procedures?
- Hire new engineers to build out your implementation team?

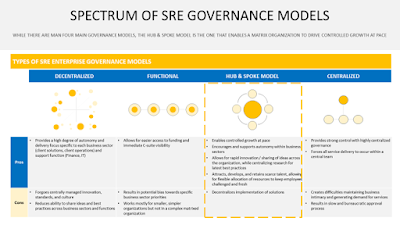

Structures for SRE and DevOps teams

Both DevOps and SRE teams vary based on how centralized they are. At one end is a centralized team, which creates tools, infrastructure, and processes that the entire organization shares.

The other extreme is a distributed team, where DevOps/SRE engineers are assigned to individual teams and projects. They maintain the reliability and velocity goals for each team.

In conclusion, different approaches will be more efficient depending on the maturity of your organization and your needs. You should consider how you want to structure your DevOps and SRE teams, but the big picture remains the central theme of such transformations: the 4 quadrants of Digital Transformation (People, Customers, Operations, and Services) working towards Value creation.